About a year ago, we started working on the Run Insights feature for CloudBees DevOptics. A small team of engineers (averaging around three to four of us) worked on the Run Insights service over the course of the past year. The Run Insights service gave us the opportunity to develop a greenfield service using continuous deployment from the very start.

One year, and over 343 deployments later, I thought it would be a good idea to share what we have learned from our experience.

CloudBees DevOptics Run Insights architecture

There were a couple of design decisions that we made on the basis that we wanted to use continuous deployment from the start.

When you are doing continuous deployment, you cannot control when new versions get deployed. Any downtime during deployment would not fill our users with joy. This means that you need a zero-downtime deployment strategy.

We decided to implement Run Insights as a single microservice in control of its own data stores and with a Blue-Green deployment model. A Blue-Green deployment model means that during deployment both the old (blue) and new (green) versions of the service will be serving traffic, letting us warm up the new version’s instances before destroying the old version. If we encounter issues with the new version during deployment, we can safely kill the new version, confident that users will continue to have their requests served.
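As a rough illustration of that sequence, here is a minimal Python sketch. It models the versions receiving traffic as a plain set; `blue_green_deploy` and the `warm_up` callback are hypothetical names made up for this example, not part of the Run Insights codebase or of any deployment tool.

```python
def blue_green_deploy(serving, new_version, warm_up):
    """Roll out new_version next to whatever is currently serving.

    serving -- set of version names currently receiving traffic (mutated in place)
    warm_up -- hypothetical callback: returns True once the new version is healthy
    """
    old_versions = set(serving)
    serving.add(new_version)  # blue and green both serve traffic
    if not warm_up(new_version):
        serving.discard(new_version)  # kill green; blue keeps serving
        return False
    serving.difference_update(old_versions)  # green is warm, so blue can go
    return True


serving = {"v1"}
ok = blue_green_deploy(serving, "v2", warm_up=lambda version: True)
failed = blue_green_deploy(serving, "v3", warm_up=lambda version: False)
```

After both calls, `serving` holds only `"v2"`: the first rollout replaced `"v1"`, and the failed rollout of `"v3"` left the live version untouched.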

In picking the Blue-Green deployment model, we need to worry about the data schemas in our data stores. New versions of the service will evolve the data schema, and we want this evolution to be automated.

Our approach has been to make schema changes in such a way that the schema can always be consumed by the previous deployed version.

The majority of our schema changes have been adding new columns or tables, which does not break the older version.

With breaking changes, we try to split them up into a series of deployments. For example, if we wanted to rename a column in a SQL table, one way we can handle the rename is:

- Create a temporary view of the backing table and update the code to use the view rather than the table directly
- Rename the column, update the view and update the code to use the table with the new column name
- Delete the view

While this is not as easy as just renaming the column, it has the property that each deployment can run concurrently with the previous deployment.
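The three deployments can be sketched end to end. The example below is only an illustration under stated assumptions: it uses SQLite (through Python's `sqlite3` module, which needs SQLite 3.25 or later for `RENAME COLUMN`) as a stand-in for PostgreSQL, and the table `runs`, the columns `duration` and `duration_ms`, and the view `runs_v` are hypothetical names.

```python
import sqlite3


def query_via_view(conn):
    # Code from deployments 1 and 2's predecessor: reads the old name via the view.
    return conn.execute("SELECT id, duration FROM runs_v ORDER BY id").fetchall()


def query_via_table(conn):
    # Code from deployment 2 onwards: reads the table with the new column name.
    return conn.execute("SELECT id, duration_ms FROM runs ORDER BY id").fetchall()


# isolation_level=None puts the connection in autocommit mode, so the
# explicit BEGIN/COMMIT below fully controls the transaction.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE runs (id INTEGER PRIMARY KEY, duration INTEGER)")
conn.executemany("INSERT INTO runs (id, duration) VALUES (?, ?)",
                 [(1, 1200), (2, 3400)])

# Deployment 1: add a view over the table; the code switches to the view.
conn.execute("CREATE VIEW runs_v AS SELECT id, duration FROM runs")
step_1 = query_via_view(conn)

# Deployment 2: rename the column and repoint the view in one transaction,
# so readers of the view never observe a missing or broken view.
conn.execute("BEGIN")
conn.execute("DROP VIEW runs_v")
conn.execute("ALTER TABLE runs RENAME COLUMN duration TO duration_ms")
conn.execute("CREATE VIEW runs_v AS SELECT id, duration_ms AS duration FROM runs")
conn.execute("COMMIT")
step_2_previous_version = query_via_view(conn)
step_2_new_version = query_via_table(conn)

# Deployment 3: nothing reads the view any more, so it can be dropped.
conn.execute("DROP VIEW runs_v")
step_3 = query_via_table(conn)
```

At every step, the queries issued by the previous deployment and by the new one return the same rows, which is the property that lets the two versions run side by side.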

We have three different data stores:

- PostgreSQL is used for data that requires transactional consistency. We use FlywayDB to manage the schema.
- Elasticsearch is used for the metrics data that Run Insights uses for reporting. We were able to use aliases and our own custom schema migration code to handle the very specific subset of schema migrations that the data we store in Elasticsearch is subject to.
- Redis is used as a cache for more costly computational results. We use a version string as part of the key prefixes to manage the schema of objects in the Redis cache. In the event that the schema changes we will have two versions in the cache until the old deployment is stopped.
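The versioned key prefix used for the cache can be illustrated with a small sketch. This is an assumption-laden example rather than the actual Run Insights code: a plain dict stands in for a Redis client, and the `VersionedCache` class and the `run-insights:<version>:` key layout are hypothetical.

```python
class VersionedCache:
    """Cache wrapper that namespaces every key by a schema version string."""

    def __init__(self, store, schema_version):
        self._store = store  # any dict-like store; stands in for Redis here
        self._prefix = f"run-insights:{schema_version}:"  # hypothetical layout

    def put(self, name, value):
        self._store[self._prefix + name] = value

    def get(self, name):
        return self._store.get(self._prefix + name)


shared = {}  # one cache shared by both deployments
blue = VersionedCache(shared, "v1")   # old deployment, old object schema
green = VersionedCache(shared, "v2")  # new deployment, new object schema

blue.put("report:42", {"total": 10})
green.put("report:42", {"total": 10, "failed": 2})
```

Each deployment only ever reads entries written with its own schema version, so the shared store briefly holds two copies of the same logical object. How the old-version entries are cleaned up once the old deployment stops is not covered here.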

With both of the above in place (Blue-Green deployment and automated schema management), we have found one golden rule for our codebase:

Never roll back, only move forward.

Deployment of a version older than the current blue version would require restoring the PostgreSQL and Elasticsearch data (to roll back schema version changes), which would result in both loss of data and significant downtime.

This brings us neatly to the topic of deployment.

We deploy automatically from the master branch. We considered other schemes. For example, at the time we started, the CloudBees DevOptics Value Streams service used more of a GitOps-style flow and triggered deployment by pushing changes to the production branch. What we wanted was to leverage the knowledge that merging to master will deploy to production, to improve code quality and ensure engineers write good tests before merging.

We have three production-like environments, all of which have the Blue-Green deployment functionality:

- Integration
- Staging
- Production

The main difference between the Integration and Staging environments is the security controls and operational policies that apply to the Staging environment. The additional controls we apply to the Staging environment allow us to load a production data dump into Staging when we need to validate a schema migration that includes data mutation.

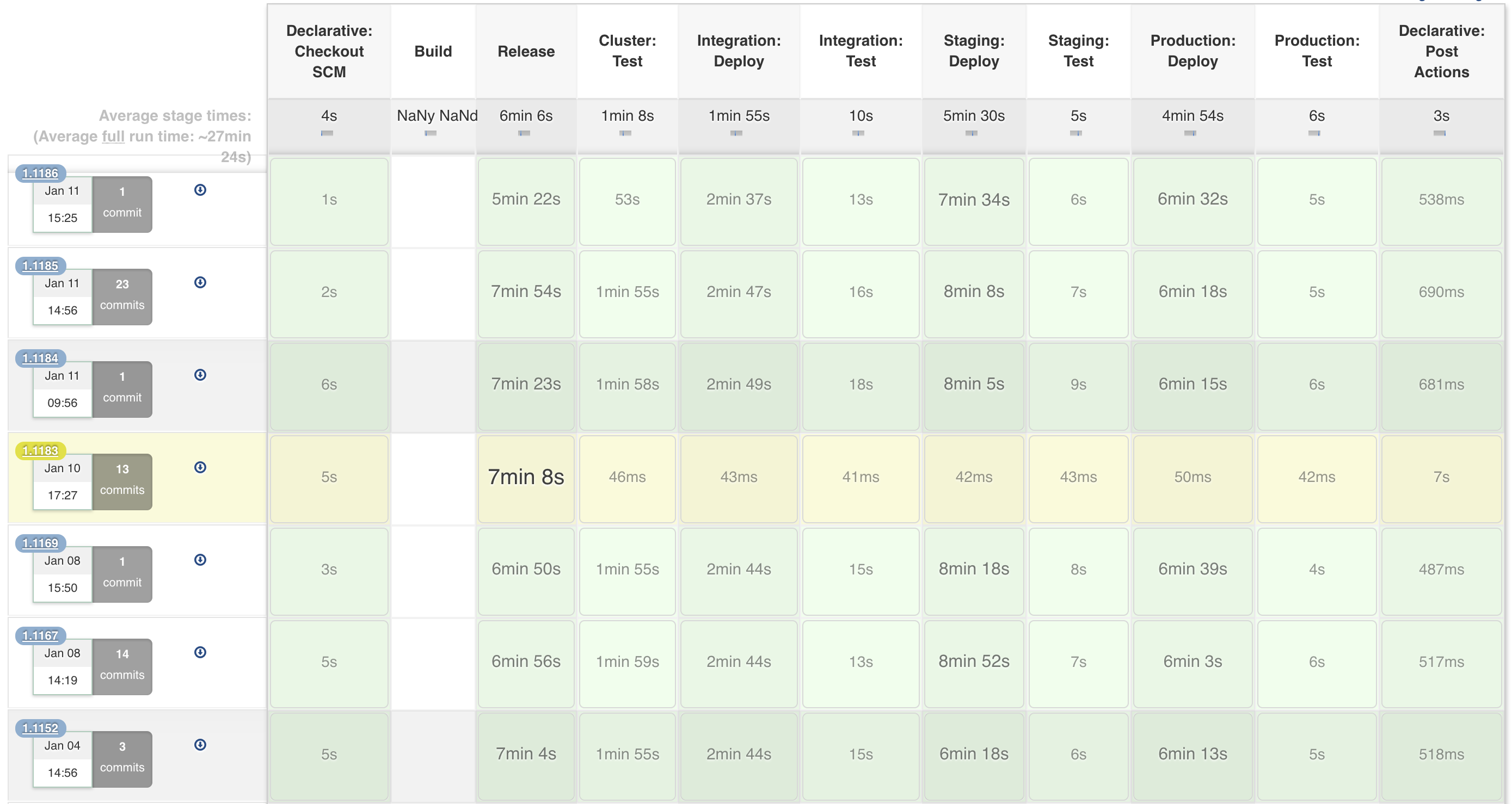

Our deployment flow looks a little like this:

Some things to note:

- We run load tests and acceptance tests as part of each deployment in each environment. If the tests fail in a specific environment, we stop the new version and leave the old version serving traffic.
- If the deployment fails in staging or production, we only revert that environment; we do not revert the previous environments.
- This deployment model means that all feature development happens through pull requests.
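The promotion rules in these notes can be sketched as a short loop. This is a simplified model, not the real pipeline: `promote`, the `live` mapping, and the `tests_pass` callback (standing in for the load and acceptance tests) are hypothetical names.

```python
ENVIRONMENTS = ["integration", "staging", "production"]


def promote(version, live, tests_pass):
    """Deploy version to each environment in order.

    live       -- dict mapping environment name to the version serving traffic
    tests_pass -- hypothetical callback(env, version) -> bool standing in for
                  the load and acceptance tests run during each deployment
    Returns the environment where the rollout stopped, or None on success.
    """
    for env in ENVIRONMENTS:
        if not tests_pass(env, version):
            # Stop the new version in this environment only: live[env] still
            # points at the old version, and earlier environments keep the
            # new one.
            return env
        live[env] = version
    return None


live = {env: "v1" for env in ENVIRONMENTS}
stopped_at = promote("v2", live, lambda env, version: env != "staging")
```

In this run the tests fail in staging, so integration keeps serving `"v2"` while staging and production stay on `"v1"`.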

What worked

- Blue-Green deployment

  - This has been wonderful. We have had zero unplanned downtime.

    We have had planned downtime, for example when operations needed to make infrastructure changes and downtime could not be avoided.

- Continuous deployment culture

  - Once you know that merging the pull request means the code will end up in production, you write tests, always. Tests are the only thing that can stop broken code from ending up in production. This simple fact psychologically changes how you think about testing.
  - Because failed tests stop deployments, we show zero mercy for flaky tests. A flaky test gets fixed or rewritten as soon as we spot it.
  - Deployment has become a non-event. The pull requests get verified by Jenkins before we merge. Because we have good tests, pull request verification is trustworthy. In general, we just merge the pull request and move on: worst case, Jenkins will send us a notification of the failure, before production, and we can then fix the issue.

    Even with all this, we still try not to merge pull requests on Friday afternoons.

- Acceptance tests stopped broken deployments

  - We are not perfect. Sometimes when we merge a pull request, the acceptance tests will fail. One example is around authentication: pull requests are validated against a throw-away environment that is not connected to our real authentication service. This means that we cannot run some of the acceptance tests as part of the pull request verification.

    But our acceptance tests catch those kinds of issues and prevent the broken deployments.

What went wrong

- Elasticsearch schema migration

  - The first version of our custom Elasticsearch schema migration code was basically a 500-line mega function. We had black box tests that verified everything worked and covered all the logic branches, and the code coverage was high. However, there was a timing bug that only surfaced when there was a lot of data to update (as in several months' worth).
  - Our test cases used generated data with only a few data points because they would run slowly if we had to inject lots of data.
  - There was much fun when the production deployment failed for the first schema migration after go-live.
  - Our application start-up for the green nodes was failing during schema validation because the schema validation code couldn’t complete.

  Outcome

  Thankfully, our Blue-Green deployment meant that the blue nodes were still serving customer requests, and we were able to refactor the 500-line mega function into a more testable composition of small functions.

  - More tests meant that we found and fixed the timing bug (without needing large test datasets).
  - We changed our processes and pull request review template to require validation of schema migration pull requests against a dump of the production database.

- Poor test coverage

  - When we added the ability to filter Run Insights by master, one of the end-points just had a single "smoke test".
  - When the front-end team started adding the UI to actually invoke this end-point, they discovered some bugs in the implementation.
  - Poor test coverage meant that we had broken code deployed in production. Thankfully, this was not user-facing, because the updated front-end using the new end-points had not been deployed.

  Outcome

  We added more tests, found and fixed the bugs, and reinforced our culture of always writing tests.
