This is part of a series of blog posts in which various CloudBees technical experts have summarized presentations from the Jenkins User Conferences (JUC). This post is written by Harpreet Singh, VP Product Management, CloudBees, about a presentation given by Julien Pivotto of Inuits at JUC Berlin.

Inuits is an open source consultancy firm that has been an early practitioner of DevOps. They set up Drupal-based asset management systems that store assets and transcode videos for a number of clients. These systems are set up so that each client has a few environments (Dev/UAT/Production), and each environment has one backend and a number of front ends for that backend. Thus, they end up managing a lot of pipelines for each Drupal site that they set up. Consequently, they need a standard way of setting up these pipelines.

In short, Inuits is a great use case for DevOps teams that are responsible for bringing in new teams and enabling them to deliver continuously and deliver software fast.

There are simple approaches to building pipelines through the UI (clone-a-job) and through XML (clone config.xml), but these approaches don't scale well. Julien outlined two distinct approaches to setting up pipelines:

- Pipelines through Puppet

- Pipelines through Jenkins plugins

I will focus mostly on the Puppet piece in this blog, as it is a novel approach that I haven't come across before, although Julien himself leans towards using standard Jenkins plugins to deliver these pipelines.

Pipelines through Puppet

Julien started with a definition of a pipeline:

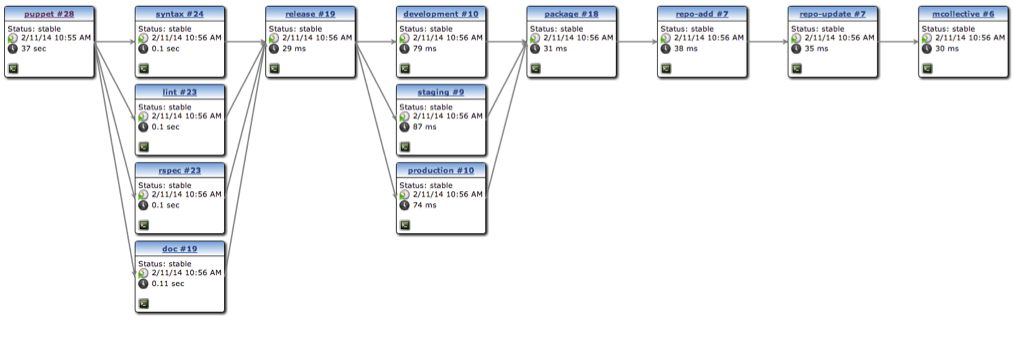

A pipeline is a chain of Jenkins jobs that are run to fetch, compile, package, run tests and deploy an application

He then explained how to set up these chains of inter-related jobs through Puppet. Usually, Puppet is used to provision the OS, apps and databases, but not application data. In his approach, he puppetized the provisioning of Jenkins itself and of the job configurations (application data).

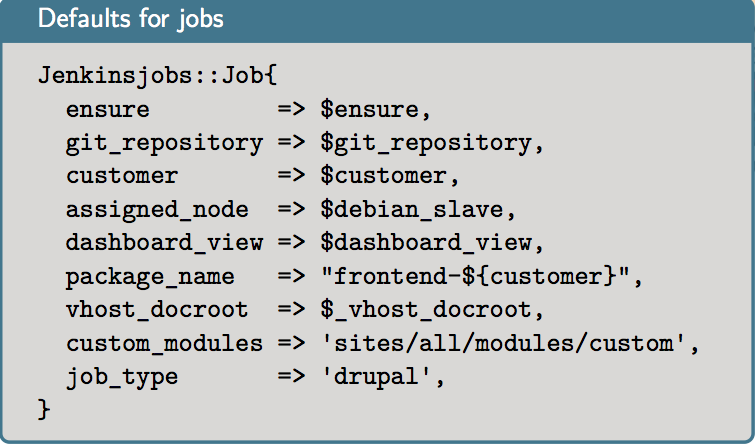

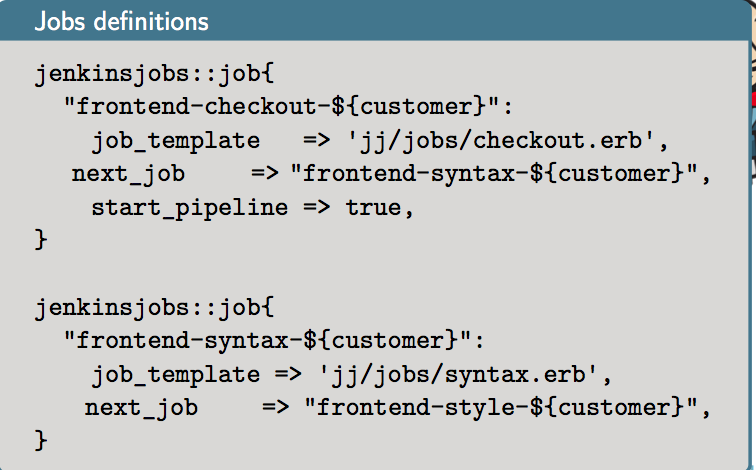

Each type of job and pipeline has a corresponding Puppet manifest that takes arguments such as the job name, the next job, parameters, etc. Since the promotions plugin adds some metadata to an existing job config and adds a separate configuration folder inside the jobs folder, promotions have their own manifest as well. Configuration changes in the XML are made through Augeas.

With the above approach, onboarding a team is easy: Puppet provisions a new Jenkins with its own set of pipelines and jobs. The history of configuration changes can be tracked in the source repository.
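To make the idea of parameterized job definitions concrete, here is a minimal sketch in Python rather than Puppet. It is not Julien's manifest code: the function names, the job-naming scheme and the shell commands are all assumptions made for illustration, and the generated XML is a heavily simplified version of a freestyle job's config.xml (a real one carries many more elements). It shows only the core idea from the talk: each job is rendered from a template that takes a job name and the next job in the chain.

```python
import xml.etree.ElementTree as ET


def render_job_config(description, shell_command, next_job=None):
    """Build a simplified freestyle-job config.xml and return it as a string.

    Hypothetical helper: real Jenkins config files contain more elements.
    """
    project = ET.Element("project")
    ET.SubElement(project, "description").text = description

    # A single shell build step.
    builders = ET.SubElement(project, "builders")
    shell = ET.SubElement(builders, "hudson.tasks.Shell")
    ET.SubElement(shell, "command").text = shell_command

    # Chain to the downstream job, if there is one.
    publishers = ET.SubElement(project, "publishers")
    if next_job is not None:
        trigger = ET.SubElement(publishers, "hudson.tasks.BuildTrigger")
        ET.SubElement(trigger, "childProjects").text = next_job

    return ET.tostring(project, encoding="unicode")


def render_pipeline(prefix, steps):
    """Return {job_name: config_xml}, with each job triggering the next.

    `steps` is an ordered list of (step_name, shell_command) pairs.
    The "<prefix>-<step>" naming scheme is an assumption for this sketch.
    """
    names = [f"{prefix}-{step}" for step, _ in steps]
    configs = {}
    for index, (step, command) in enumerate(steps):
        next_job = names[index + 1] if index + 1 < len(names) else None
        configs[names[index]] = render_job_config(
            f"{step} job for {prefix}", command, next_job
        )
    return configs
```

Calling `render_pipeline("client-a-dev", [("fetch", "git pull"), ("package", "make package"), ("deploy", "make deploy")])` would produce three chained job definitions for one environment; repeating the call with a different prefix gives the next environment the same pipeline shape.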

However, delivering these pipelines gets hard because you end up with a lot of templates. Each configuration change also requires a Jenkins restart, which hurts team productivity.

Delivering pipelines through Puppet is the infrastructure-as-code approach. Although the approach is novel, its disadvantages outweigh its benefits, and Julien leaned towards using Jenkins plugins to deliver pipelines instead.

Pipelines through Jenkins Plugins

Julien talked about two main plugins to realize pipelines. These plugins are well known in the community. The novel approach is connecting these two together to deliver dynamic pipelines.

Build Flow plugin: defines pipelines through a Groovy DSL, with constructs for parallel execution, conditionals, retries and rescues.

Job Generator plugin: creates and updates jobs on the fly.

Julien then combines the two: the starting jobs (orchestrators) are created using Build Flow, and the subsequent jobs are generated by Job Generator. Using the conditional and parallel constructs, he can deliver complex pipelines.
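The control-flow constructs mentioned above can be illustrated with a small Python sketch. This is not the Build Flow Groovy DSL and does not call Jenkins; the `retry`, `parallel` and `orchestrate` helpers are hypothetical names, and each "job" is simply a Python callable. The sketch only shows how an orchestrator can combine retries, parallel branches and a conditional step into one pipeline.

```python
from concurrent.futures import ThreadPoolExecutor


def retry(times, job):
    """Run job() up to `times` times and return the first successful result."""
    if times < 1:
        raise ValueError("times must be at least 1")
    last_error = None
    for _ in range(times):
        try:
            return job()
        except Exception as error:  # remember the failure and try again
            last_error = error
    raise last_error


def parallel(*jobs):
    """Run jobs concurrently; results come back in the order the jobs were given."""
    with ThreadPoolExecutor(max_workers=len(jobs)) as pool:
        futures = [pool.submit(job) for job in jobs]
        return [future.result() for future in futures]


def orchestrate(build, test_suites, deploy, deploy_enabled):
    """Hypothetical orchestrator: build with a retry, test in parallel, deploy if enabled."""
    log = [retry(2, build)]
    log.extend(parallel(*test_suites))
    if deploy_enabled:  # conditional stage
        log.append(deploy())
    return log
```

In this sketch the orchestrator plays the role of the starting job, while the callables passed in stand for the generated downstream jobs.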

The above approaches highlight two things:

- Continuous delivery is becoming the de facto way organizations want to deliver software.

- Since Jenkins is the tool of choice for delivering software, it has to evolve and offer first-class constructs that help companies like Inuits deliver pipelines easily.

We at CloudBees have heard the above loud and clear over the last year. Consequently, the workflow work delivered in OSS by Jesse Glick brings these first-class constructs to Jenkins. As this work moves towards a 1.0 in OSS, we will get to the point where the definition of a pipeline will change from

A pipeline is a chain of Jenkins jobs that are run to fetch, compile, package, run tests and deploy an application

to

A workflow pipeline is a Jenkins job that describes the flow of software components through multiple stages (and teams) as they make their way from commit to production.

-- Harpreet Singh

Follow Harpreet on Twitter.